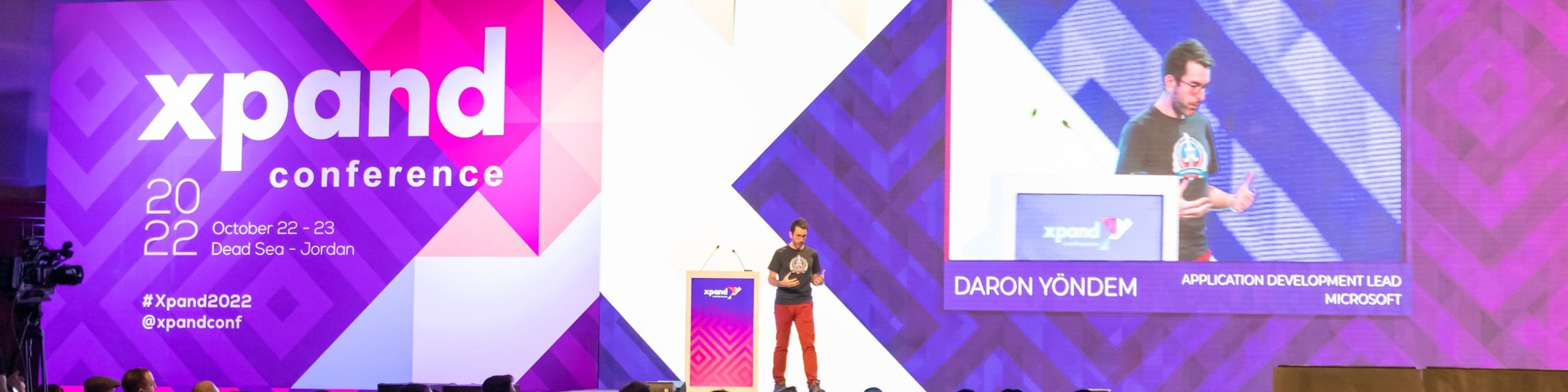

Daron Yöndem

Microsoft - Tech Lead

Istanbul, Turkey


Daron Yöndem has more than 20 years in the tech industry and currently serves as a tech lead at Microsoft. He focuses on Azure AI applications across 109 countries in Central and Eastern Europe, the Middle East, and Africa. His expertise covers solution architecture, SaaS development, and cloud-native architecture. Before joining Microsoft, Daron founded a company and served as CTO of two startups, specializing in building SaaS products. He is also an author, with publications including a book on NoSQL databases. Daron holds a Master of Science in Computer Science and a Master of Arts in Organizational Leadership, and is currently pursuing a PhD in Information Systems Leadership Studies. He regularly shares his knowledge as a speaker at international tech conferences.


Mastering Prompt Engineering Techniques

This session provides an in-depth exploration of prompt engineering techniques for recent large language models. It presents a comprehensive walkthrough of prompt engineering strategies, demonstrating how to optimize the model's responses and improve its performance on different tasks.

The session begins by explaining the distinction between base Large Language Models (LLMs) and instruction-tuned LLMs. It then moves on to techniques that guide the model's responses, such as controlling temperature and providing explicit instructions. A significant part of the session is dedicated to strategies for handling potential model errors, including self-check mechanisms and 'disallow' lists. We also introduce advanced techniques such as 'few-shot prompting', 'self-consistency', 'tree of thoughts', 'retrieval augmented generation', and 'jailbreaking'. Practical examples throughout the session show how these techniques can be applied to tasks like sentiment analysis, summarization, entity extraction, translation, and more.

Attendees will leave the session equipped with practical knowledge and insights that can be directly applied to enhance their work with large language models. This session is a must-attend for AI practitioners, developers, data scientists, and anyone interested in leveraging the power of LLMs for various applications.

Meet my AI Sidekick!

It has been a while since we gained access to LLMs. I have been experimenting with them not only for customers but for myself as well. How could I increase my own productivity? What value could I get out of them for myself? In this session, I will share what I have learned, what I did, which workflows stuck, and how I use LLMs in my day-to-day life.

I've been diving deep into the potential of LLMs, not just to level up customer experiences, but also to supercharge my own productivity. I've been asking myself: how can I unlock the full potential of this tech to boost my own efficiency, and what unique value can it bring to my daily grind?

In this session, I'm stoked to share my discoveries with you. I'll unpack the insights I've gained, the tactics I've deployed, and the workflows that have really stuck. Plus, I'll share how I'm integrating LLMs into my day-to-day routine.

So, come join me on this journey as we navigate the exciting landscape of this technology. Who knows, you might just find fresh ways to turbocharge your own productivity.

Building Agentic LLM Workflows with AutoGen

In the era of artificial intelligence, Large Language Models (LLMs) have catalyzed a transformative shift across multiple domains, heralding a new age of computational ingenuity and automation. At the forefront of this evolution stands AutoGen, a framework designed to optimize, automate, and simplify agentic LLM workflows through the deployment of customizable and conversable agents. This session delves into the cutting-edge domain of Agentic LLM Workflows with AutoGen, exploring its capacity to not only leverage the advanced capabilities of LLMs but also to transcend their limitations by fostering synergistic interactions between agents, humans, and tools.

AutoGen introduces a paradigm shift in workflow automation, offering a seamless interface for orchestrating the multi-agent conversations that underpin complex task execution. The framework also supports training LLM agents via AgentOptimizer, a class that iteratively improves an agent's functions based on its historical performance, refining the agent's skills over successive runs.

Participants will gain insights into AutoGen's versatile architecture, which allows for the agile development of LLM applications by enabling agents to converse, learn, and collaborate effectively. The session will cover practical examples, demonstrating how AutoGen significantly reduces both the manual effort and coding complexity traditionally associated with creating intricate LLM-based applications, thereby accelerating the development cycle and enhancing the efficacy of the outcomes.

This presentation aims to give attendees a comprehensive understanding of AutoGen's approach to LLM workflow automation. It will highlight how AutoGen's agentic workflows can be harnessed to build a new generation of intelligent applications that are more adaptable, more efficient, and capable of executing complex tasks with greater ease and precision.

Join me to explore the revolutionary potential of AutoGen in advancing the capabilities of LLM workflows and setting new benchmarks in the field of artificial intelligence and machine learning.

Living on an End-to-End Serverless Architecture on Azure

Hate servers and provisioning? Join me in this session as we work toward serverless nirvana: an end-to-end serverless architecture for your next application. We will discuss serverless compute options, serverless database choices, serverless eventing, serverless security, and more. We will also talk about scaling and billing. Join the discussion!

Discover Serverless Data for Serverless Apps

Serverless is all the hype. We talk about serverless applications but rarely look into our data service options. Building a fully serverless architecture requires every layer to fit our serverless world. In this session, we will look at data services in Azure that are serverless by nature, or that can be serverless with the right implementation.

What comes after your first function in Serverless?

FaaS is the nirvana of PaaS. You can spin up functions with HTTP endpoints and expose indefinitely scalable APIs in a couple of seconds. Doesn't that sound too good to be true? How do you handle versioning and routing for your APIs? What if you need a stateful function? What are the right tools for eventing between your functions and the platform they run on? What if you want to orchestrate your functions the way you orchestrate your containers? Finally, what about a scalable PaaS database to back all your functions? In this fast-paced session, we will try to answer all these questions.

Function Orchestration in the world of Serverless

You have a function, or two, or maybe more? Now is the time to make sure you have full control over how data and communication flow between your functions. You need something that controls how your functions interact with each other: something that gives you granular control, or sometimes just a simple user interface to get it done. This session covers your options in Azure to accomplish all that. We will look into Durable Functions and Logic Apps to understand where they can help us scale our serverless move.

Global Azure 2022 Sessionize Event

Update Conference Prague 2021 Sessionize Event

Azure Community Conference 2021 Sessionize Event

Global Azure 2021 Sessionize Event

AzConf Sessionize Event

MVP Days Israel 2020 Sessionize Event

Global Azure Virtual Sessionize Event

Global AI Community - On virtual tour Sessionize Event
