To get the most out of your event, both for your audience and yourself, you need an efficient way to determine which submitted sessions are the best fit for it. Sessionize offers an intuitive session evaluation system built for that exact purpose, which you can use by setting up an evaluation plan. Each evaluation plan uses one of three evaluation modes, that is, three different ways for your evaluators to rate the submitted sessions:

- Stars rating evaluation mode

- Yes/No evaluation mode

- Comparison evaluation mode

You can handle your entire event with a single evaluation plan, or you can combine an unlimited number of evaluation plans for a more refined session evaluation. For example, you can set up a plan to evaluate only the sessions belonging to a specific track, organize multiple rounds of evaluations, and so on. The latter can be particularly useful for events with many submissions.

If you need help setting up your first evaluation plan and adding your team members as evaluators, check out the following step-by-step guide: Evaluations: From start to finish.

Here's an overview of all three evaluation modes offered by Sessionize, so you can get a better idea of which one would suit you best.

Stars rating evaluation mode

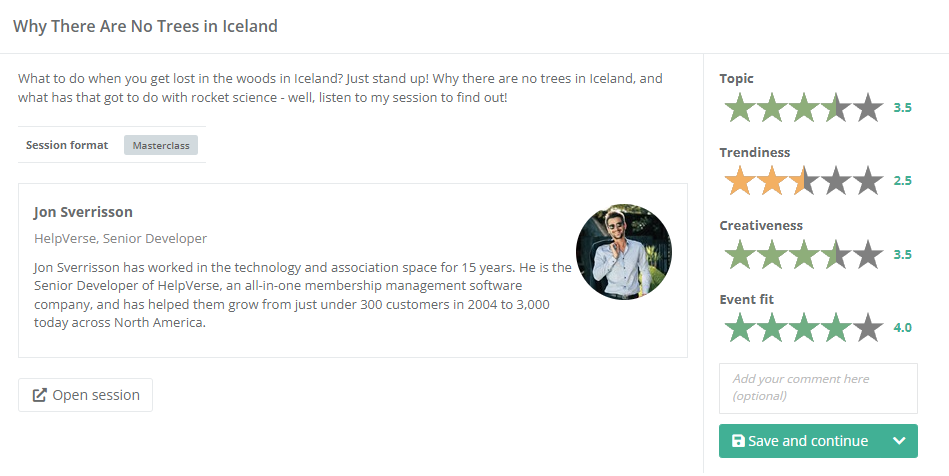

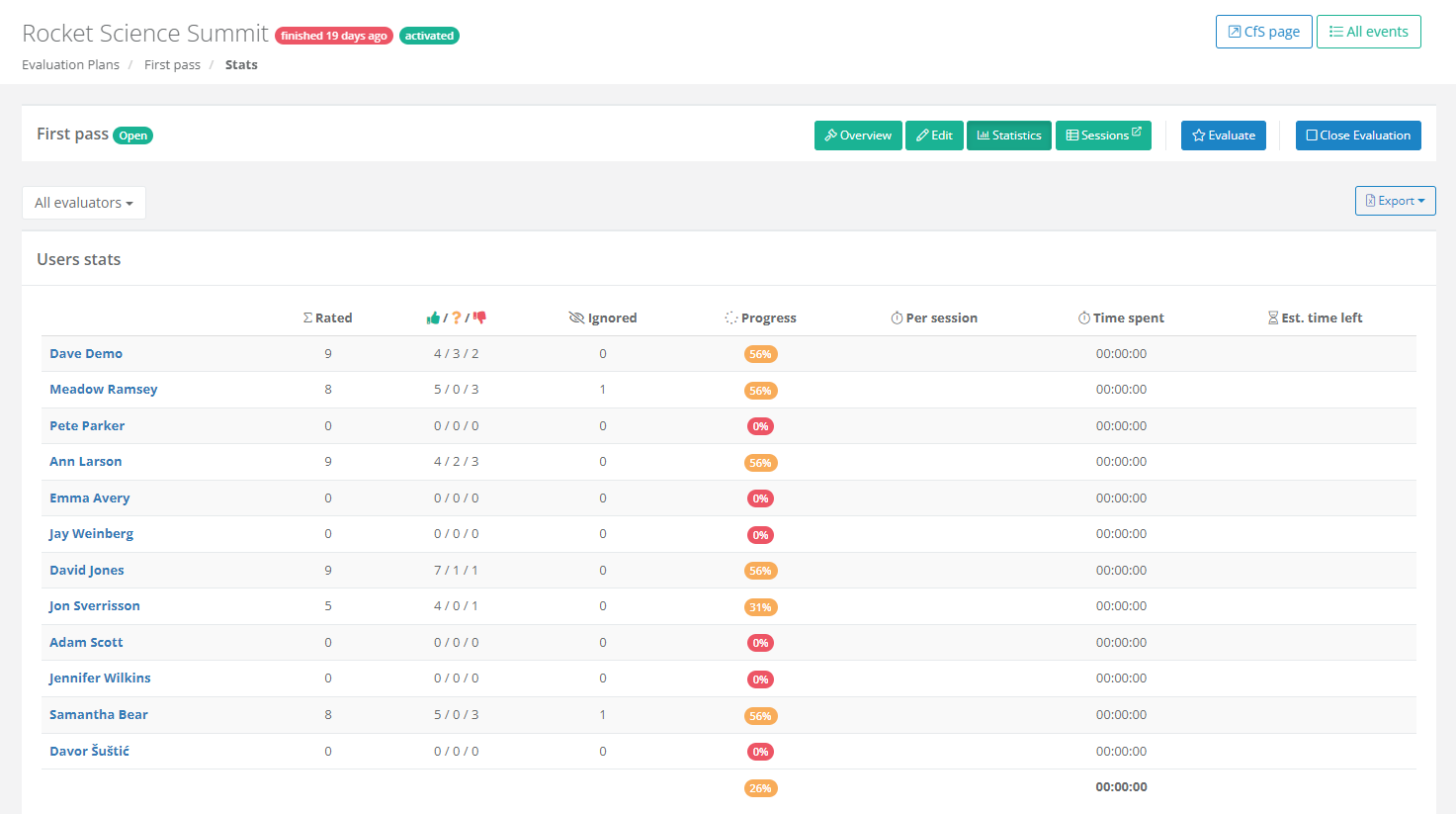

The Stars rating evaluation mode is possibly the most straightforward one. Your evaluators simply have to go through every session and rate it with 1-5 stars. Half-star ratings are also supported. Once they do that, you'll have a clear overview of which sessions have the highest rating and, as such, are the best choice for your event.

For a more elaborate approach to the stars rating, you can click on the Use multiple criteria option and assign additional criteria for session evaluation. You're free to decide which criteria you want your evaluators to use and how much percentage weight each criterion has in the final stars rating of the session. A minimum of two criteria is required, and there's no upper limit on their number. Should you require more than four, simply edit the evaluation plan and save changes after adding each additional criterion field.
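To get a feel for how weighted criteria could combine into a single stars rating, here's a minimal sketch. It assumes the final rating is a plain weighted average and that the weights add up to 100%; the function name `weighted_stars` and the three criteria are made up for illustration and aren't taken from Sessionize itself.

```python
def weighted_stars(ratings, weights):
    """Combine per-criterion star ratings into a single score.

    ratings: criterion name -> stars (1-5, half stars allowed)
    weights: criterion name -> percentage weight (assumed to sum to 100)
    """
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same criteria")
    if abs(sum(weights.values()) - 100) > 1e-9:
        raise ValueError("weights must sum to 100")
    # Weighted average: each rating counts in proportion to its weight.
    return sum(ratings[name] * weights[name] for name in weights) / 100


# Hypothetical criteria and weights, chosen only for illustration.
weights = {"relevance": 50, "originality": 30, "clarity": 20}
ratings = {"relevance": 4.5, "originality": 3.0, "clarity": 5.0}
score = weighted_stars(ratings, weights)
print(score)  # 4.15
```

In this example, the session's strong clarity rating only moves the result a little because that criterion carries the smallest weight.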

Do consider that adding multiple criteria to your stars rating evaluation plan can heavily affect the time needed to rate all of your submissions. This evaluation mode generally works best for events that don't have a huge number of submissions.

Yes/No evaluation mode

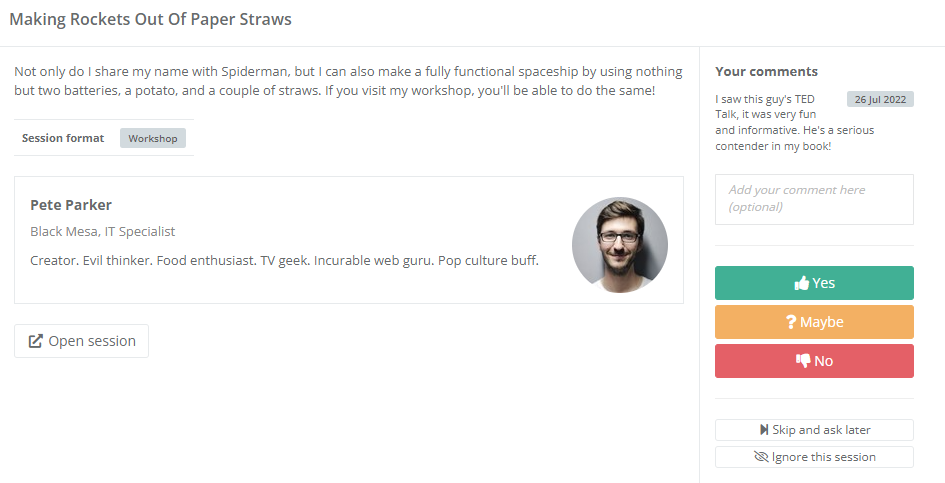

The Yes/No evaluation mode has your evaluators go through the submitted sessions one by one and pick between three options: Yes, No, or Maybe. They can also ignore the session entirely if, for any reason, they feel unable to rate it properly.

The Yes/No evaluation mode can be used to great effect for the initial screening of the sessions submitted for your event, as you'll be able to quickly eliminate those that don't fit your event at all. Then you can evaluate the remaining sessions with one of the other two evaluation modes. Of course, nothing's stopping you from using the Yes/No evaluation mode as the backbone of your entire event without combining it with other evaluation modes.
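If you'd like to picture how such an initial screening could work, here's a rough sketch. The cut-off rule is purely an assumption made for this example (keep a session when at least half of its decisive votes are Yes, with Maybe and ignored votes counting neither way), and the `screen` function and its `min_yes_share` parameter are hypothetical, not part of Sessionize.

```python
from collections import Counter


def screen(votes, min_yes_share=0.5):
    """Return the sessions that pass a simple Yes/No screening.

    votes: session title -> list of "yes", "no", "maybe", or None (ignored)
    min_yes_share: hypothetical threshold on yes / (yes + no)
    """
    kept = []
    for session, ballots in votes.items():
        counts = Counter(b for b in ballots if b is not None)
        decided = counts["yes"] + counts["no"]
        # Sessions without any decisive vote are kept for the next round.
        if decided == 0 or counts["yes"] / decided >= min_yes_share:
            kept.append(session)
    return kept


# Made-up votes from four evaluators.
votes = {
    "Intro to Elo": ["yes", "yes", "maybe", None],
    "Off-topic pitch": ["no", "no", "yes", "no"],
    "Unclear abstract": ["maybe", None, "maybe", None],
}
shortlist = screen(votes)
print(shortlist)  # ['Intro to Elo', 'Unclear abstract']
```

The sessions left on the shortlist could then go into a second evaluation plan that uses the Stars rating or Comparison mode.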

Comparison evaluation mode

The Comparison evaluation mode is the one we're particularly proud of because it's built around the famous Elo rating system, an advanced method for calculating the relative skill levels of players in games such as chess. When applied to your event's sessions, this method will have you rank different sets of three sessions, and the system will calculate your best options faster and more conveniently than any other method of evaluation.

The Elo rating algorithm explained

We use the Elo rating system, a chess-rating system also used for multiplayer competitions in a number of video games, sports, board games, etc. You can read more about it on Wikipedia.

In the beginning, each session gets a 1500 rating.

A comparison starts with choosing a triplet of sessions that will be compared. The first batch of triplets is chosen randomly until all sessions are compared at least once. Then things get smarter. We look at comparison count, win count, and rating difference to pick the next triplet while ensuring that you never compare the same sessions twice.
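The random first phase described above can be pictured with the following sketch. It's only an illustration under our own assumptions: sessions are shuffled and cut into groups of three, and if the count isn't divisible by three, the last group is topped up with sessions that have already appeared. The `initial_triplets` function is a hypothetical name, and the smarter second phase isn't covered here.

```python
import random


def initial_triplets(session_ids, rng):
    """Randomly group sessions into triplets so that each appears at least once."""
    order = list(session_ids)
    if len(order) < 3:
        raise ValueError("need at least three sessions")
    rng.shuffle(order)
    full = len(order) - len(order) % 3
    triplets = [order[i:i + 3] for i in range(0, full, 3)]
    leftover = order[full:]
    if leftover:
        # Top up the last triplet with sessions that were already grouped.
        fillers = rng.sample(order[:full], 3 - len(leftover))
        triplets.append(leftover + fillers)
    return triplets


# Ten made-up session IDs; the seed only makes the example repeatable.
triplets = initial_triplets(range(1, 11), random.Random(0))
for triplet in triplets:
    print(triplet)
```

With ten sessions, this produces four triplets, and every session shows up in at least one of them.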

Each comparison results in three 'games': A vs. B, B vs. C, and C vs. A. The result of each game can be a win, loss, or draw. Each result changes the session's rating. The exact amount depends on the other session's current rating (as defined by the Elo rating system). Our K-factor ranges from 40 at the start to 10 towards the end of the evaluation.
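To make the three 'games' more tangible, here's a minimal sketch based on the standard Elo formulas: the expected score of A against B is 1 / (1 + 10^((R_B - R_A) / 400)), and a rating changes by K × (actual score - expected score). The function names, the choice to score all three games against the ratings from before the comparison, and the fixed K value are assumptions made for this example rather than a description of our exact implementation.

```python
from itertools import combinations


def expected_score(rating_a, rating_b):
    """Standard Elo expected score of A when playing B."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def rate_triplet(ratings, ranks, k=40):
    """Apply one ranked triplet as three pairwise Elo games.

    ratings: session -> current rating
    ranks: session -> position in the evaluator's ranking (1 = best,
           equal positions count as a draw)
    k: K-factor for this comparison (assumed constant within the triplet)
    """
    deltas = {session: 0.0 for session in ranks}
    for a, b in combinations(ranks, 2):
        if ranks[a] < ranks[b]:
            score_a = 1.0  # A wins
        elif ranks[a] > ranks[b]:
            score_a = 0.0  # A loses
        else:
            score_a = 0.5  # draw
        exp_a = expected_score(ratings[a], ratings[b])
        deltas[a] += k * (score_a - exp_a)
        deltas[b] += k * (exp_a - score_a)  # B's change mirrors A's
    return {session: ratings[session] + deltas[session] for session in ranks}


# Three fresh sessions, each starting at 1500, ranked A > B > C.
start = {"A": 1500, "B": 1500, "C": 1500}
updated = rate_triplet(start, {"A": 1, "B": 2, "C": 3}, k=40)
print(updated)  # {'A': 1540.0, 'B': 1500.0, 'C': 1460.0}
```

Since all three sessions start with the same rating, each game is worth exactly 20 points at K = 40: A wins twice, C loses twice, and B's win and loss cancel out. Points only move between the sessions, so the total of the three ratings stays the same.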

Your evaluators will have to compare and rank three sessions at a time. Once they're done ranking the first three sessions, chosen at random, they'll be presented with the next three sessions to rank, and the process will continue until all sessions are compared at least once. Then, as determined by the Elo rating system, we take a look at the current standings of all of the sessions and use that information to decide which batch of three sessions your evaluators should compare next.

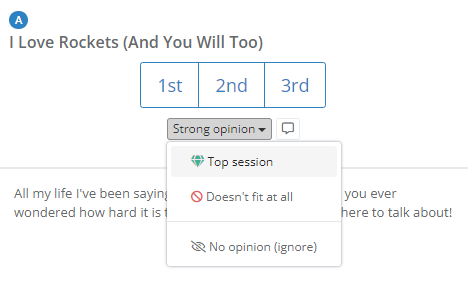

If your evaluators particularly like or dislike a certain session, or have absolutely no opinion on it, they can use the Strong opinion option to express such views. This further helps the algorithm provide an accurate final rating and speeds up the evaluation process.

The Strong opinion options are:

- Top session

- Doesn't fit at all

- No opinion (ignore)

Before long, you'll have your final session ranking, faster than you'd ever be able to reach it with any other evaluation plan.

Please note that due to the nature of the algorithm used, the Comparison mode has the following restrictions:

- Once you open the evaluation plan, you can no longer add new sessions to your event

- Evaluators can't change their minds and go back to ranking the same three sessions they've already ranked

Also worth noting is that the progress bar in Comparison mode doesn't always progress at the same pace. Ratings come not only from direct comparisons but also from indirect ones across different sessions. If the evaluations show less variety (more sessions with the same points), the percentage increase might slow down or, in extremely rare situations, even go backward. However, it's likely to pick up speed in the right direction later on, and you'll still end up reaching the result faster than with any other evaluation mode.

The beauty of the Comparison evaluation mode is that your evaluators don't have to think about anything other than ranking the three sessions presented to them. Not having to worry about any other submitted session keeps them completely focused on the task at hand.

This approach is exceptionally beneficial when there are a lot of sessions to evaluate, and that's where the Comparison evaluation mode truly shines.

Comparison table

To get a clear idea of the differences between all three available evaluation modes, here's a direct table comparison of their key features.

| | Stars rating | Yes/No | Comparison |
|---|---|---|---|
| Recommended number of sessions in the evaluation plan | < 200 | Any, but you'll get low-quality results | > 100 |
| Recommended number of evaluators | Any | Any | 2+ |
| Evaluation speed | Average | Fast | Fast |
| Quality of results | Medium | Low | High |
| Evaluators have to think about being consistent | Yes | Yes | No |
| Can the evaluator go back and change their mind? | Yes | Yes | No |
| Can new sessions be added after the evaluation has started? | Yes | Yes | No |
| Can the evaluator add comments during an evaluation? | Yes | Yes | Yes |